Automated Valuation Machine - Transforming a real-estate business with new data services

Context

Our client is a major real-estate consulting firm and a subsidiary of one of the largest banking groups in France. Once protected from disruption by norms and standards, the company decided to put digital transformation at the top of its strategic agenda, to proactively secure its leadership in an increasingly technological market. Like any long-established firm, our client had inherited legacy IT from several mergers and acquisitions, along with decades-old practices and mindsets. It needed a key partner to gain momentum and deliver value quickly and efficiently.

What did we do?

Our approach focused on two major value streams: boosting internal performance with new tools and processes, and extending the existing offer with new data-intensive services. As both streams required new systems, we first had to implement an infrastructure that would serve the requirements of both value streams and become the core enabler of a firm-wide innovation platform.

Solutions

Infrastructure stream: taking advantage of cloud platforms to foster innovation and boost time-to-market

We faced two major challenges in enabling both value streams: delivering (really) fast to catch up with the market, and optimising costs as much as possible within a fixed investment budget. At the same time, the data-oriented architecture to be set up would become a strategic asset in the long run. It was therefore essential that our client, though not a tech expert, understand the key issues at stake and own the solution. We took a very pragmatic and pedagogical approach to designing the solution.

1. Understanding “data-oriented architectures” (1 week)

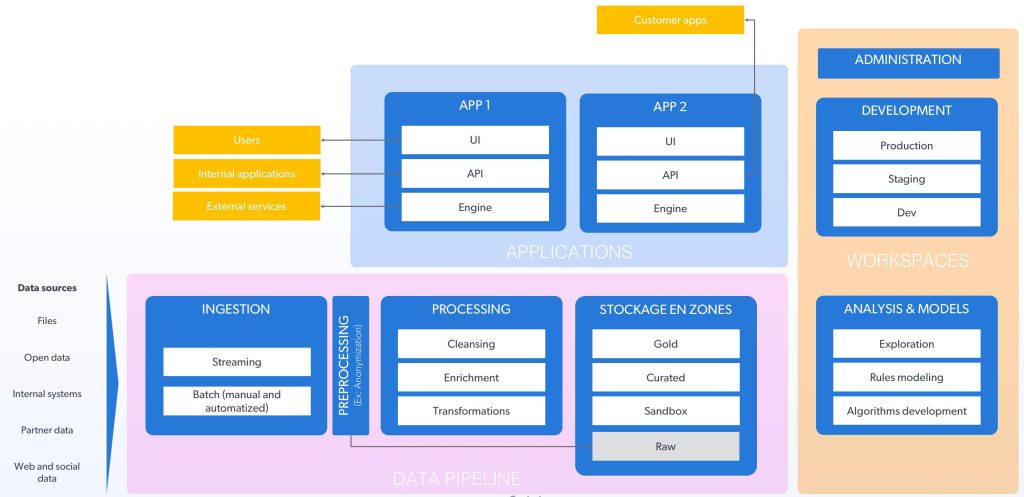

Our functional requirements and constraints came from four sources: the two value streams (described below), our client’s capabilities (especially their level of autonomy in operating the platform), and security concerns. Uncertainty lay mainly with the third source. As we would when designing any tool, we organised a series of workshops to first train the team on what to expect (and what not to expect) from a so-called “datalake”, how it works and how one operates it:

- understanding datalake architectures through a “Lego-inspired workshop”

- looking under the hood with a “hands-on session”

- mapping data sources with a “data brainstorm session”

- making key decisions during a “key issues workshop”

The main output of this intensive week, apart from training the client, was a detailed functional scope for the architecture and the main directions for its operating model.

2. Setting up and testing prototypes of the future solution and operating model (4 weeks)

Based on the functional requirements, the team designed an overall architecture highlighting key strategic modules. Following the previous workshops, two main solution providers were shortlisted: Amazon Web Services and Google Cloud Platform. Moving to the cloud was intensively debated; given our go-to-market objectives, our client’s strategic context and cost constraints, it turned out to be the optimal scenario. To support the decision, we built two prototypes and tested key use cases in “real-life conditions”, mainly:

- data ingestion from various sources, in batch and streaming, and in multiple formats

- data processing (normalisation, validation, enrichment, etc.)

- data exploration and analysis

- data storage

- data governance

- orchestration
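To give a concrete flavour of the “data processing” use case, here is a minimal sketch of a normalise / validate / enrich step applied to a single property listing. Everything in it is a hypothetical illustration, not the client’s actual pipeline: the raw field names (`postcode`, `price`, `surface`), the French-style string formats, the validation rules and the price-per-m² enrichment are all assumptions, and it is written in plain Python rather than with either cloud provider’s managed services.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Listing:
    """Hypothetical cleaned property listing."""
    postcode: str
    price_eur: float
    surface_m2: float
    price_per_m2: Optional[float] = None


def normalise(raw: dict) -> Listing:
    """Turn raw string fields (assumed formats) into typed values."""
    # Keep digits only, e.g. "350 000 €" -> 350000.0 (assumes whole-euro prices)
    price = float("".join(ch for ch in raw["price"] if ch.isdigit()))
    # Drop the unit and accept a decimal comma, e.g. "72,5 m²" -> 72.5
    surface = float(raw["surface"].replace("m²", "").replace(",", ".").strip())
    return Listing(postcode=raw["postcode"].strip(), price_eur=price, surface_m2=surface)


def validate(listing: Listing) -> List[str]:
    """Return a list of rule violations (hypothetical rules); empty means valid."""
    errors = []
    if not (len(listing.postcode) == 5 and listing.postcode.isdigit()):
        errors.append("postcode must be 5 digits")
    if listing.price_eur <= 0:
        errors.append("price must be positive")
    if not 5 <= listing.surface_m2 <= 10_000:
        errors.append("surface out of plausible range")
    return errors


def enrich(listing: Listing) -> Listing:
    """Add a derived indicator: price per square metre."""
    listing.price_per_m2 = round(listing.price_eur / listing.surface_m2, 2)
    return listing


def process(raw: dict) -> Optional[Listing]:
    """Normalise, validate, then enrich; invalid records are rejected."""
    listing = normalise(raw)
    if validate(listing):
        return None  # a real pipeline would route these to a quarantine area
    return enrich(listing)


if __name__ == "__main__":
    print(process({"postcode": "75011", "price": "350 000 €", "surface": "70 m²"}))
```

Keeping the three steps as separate functions mirrors how such stages are usually orchestrated independently, so a failing validation rule can be inspected without rerunning ingestion.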

Special attention was also paid to choosing a mapping solution, as rendering data on an attractive map was a key success factor for rolling out our applications.

The output of this Proof of Concept was a documented technical recommendation (specific to our client’s context) and associated cost scenarios.

3. Deploying an architecture with Agile methodologies

We built on the Proof of Concept to deploy the target solution, with a Scrum team running in parallel with the other projects. Data sources and associated processes are now prioritised based on their added value to both value streams. DevOps engineers, back-end developers and data engineers work hand in hand to ensure data is available in the right format to serve the other projects’ needs. Each sprint review doubles as a training session for our client, who is getting used to such an innovative and technical environment. Client teams have even started exploring the data themselves, directly through the platform. Full transparency and pricing elasticity raised our mutual confidence to a new level and deepened our partnership.

Value stream 1: boosting internal performance with state-of-the-art digital tools

Our client’s first objective was to transform its operations. Real-estate consultancy is an expert business. As in many businesses of this kind, value resides in people’s expertise and know-how, and knowledge and processes are largely tacit.

Such practices have downsides. For experts, they mean constantly reinventing the wheel, searching for data of uneven reliability, and failing to build on each other’s work. They are also a marketing and brand-image issue when competitors are increasingly equipped with attractive tools and services. For the organisation, they are a real challenge in terms of knowledge management and adaptability.

Teams needed new tools and practices to become more efficient and maintain the company’s market leadership in a changing environment. Given the transformational nature of the project, we deployed a user-centric methodology to make sure that 1/ business teams were involved from the project’s initial phase and 2/ the tool delivered real value to its users from its very first release.

1. User-centric design

To design the new processes and the associated tool, we used user-centric methodologies customised to our client’s context:

- Interviews with future users from every department of the organisation

- Information architecture workshop

- Rapid wireframing

- Feedback collection from users on interactive mock-ups

- Wireframe updates and graphic design (including logo and visual identity)

Our client was not necessarily used to such methodologies. Some were sceptical at first, but thanks to creative techniques, leadership support and systematic follow-up, even the most reluctant ended up contributing very constructively.

2. Product roadmap: designing the right “MVP”

A key challenge is often knowing where to start. Designing a Minimum Viable (and Likeable) Product is hard: it has to bring value to users from its earliest stages and generate excitement, rather than disappointment, about future versions. That sounds easy on paper, but projects have constraints, and time was our main one: the MVP had to be delivered within 6 months, design included. We therefore ran feature-mapping workshops with our client to force prioritisation, and planning poker sessions with the production team to estimate every feature. Our MVP lay at the crossroads of the two, maximising value on key features while meeting the delivery deadline.
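One way to picture “maximising value on key features while meeting the delivery deadline” is as a 0/1 knapsack problem: choose the subset of features with the highest total business value whose estimated effort fits within the team’s capacity before the deadline. This is an illustrative formalisation only; the case study does not say the MVP was computed this way, and the feature names, value scores (standing in for feature-mapping outcomes) and story-point estimates (standing in for planning poker results) are hypothetical.

```python
from typing import List, Tuple

# (feature name, business value score, effort in story points): hypothetical inputs
Feature = Tuple[str, int, int]


def select_mvp(features: List[Feature], capacity: int) -> Tuple[int, List[str]]:
    """0/1 knapsack by dynamic programming over integer story-point capacity."""
    n = len(features)
    # best[i][c] = highest total value achievable with the first i features in c points
    best = [[0] * (capacity + 1) for _ in range(n + 1)]
    for i, (_, value, effort) in enumerate(features, start=1):
        for c in range(capacity + 1):
            best[i][c] = best[i - 1][c]  # option 1: leave feature i out
            if effort <= c:  # option 2: include it if it fits
                best[i][c] = max(best[i][c], best[i - 1][c - effort] + value)
    # Walk back through the table to recover which features were selected
    chosen, c = [], capacity
    for i in range(n, 0, -1):
        if best[i][c] != best[i - 1][c]:
            name, _, effort = features[i - 1]
            chosen.append(name)
            c -= effort
    return best[n][capacity], list(reversed(chosen))


if __name__ == "__main__":
    backlog = [
        ("property search", 8, 5),
        ("map view", 7, 8),
        ("data export", 3, 3),
        ("saved searches", 4, 5),
        ("comparables report", 6, 8),
    ]
    print(select_mvp(backlog, capacity=16))
    # -> (18, ['property search', 'map view', 'data export'])
```

In practice the value and effort figures are rough and negotiated, so a model like this is best used to frame the trade-off discussion rather than to settle the scope automatically.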

3. Product roll-out and change management throughout the organisation

Onboarding business teams from the design and MVP prioritisation phases was the first key principle for driving change. But as the new tool will affect everyday operations, designing an efficient roll-out programme was also important. Drawing on our expertise in delivery management, we recommended (and will deploy) the following:

- regular communication from the company’s leadership to emphasise the importance of these new practices for the organisation

- celebrating the first release, together with very short training sessions on agile methodologies to raise awareness of how important feedback is to making the product even better

- direct support from the product team for every user, to make sure we get first-hand feedback from real users

- monthly release notes including quick video tutorials showcasing new features and peer testimonials

- in-depth analysis of app usage data to identify pain points in user journeys and provide tailored support to different types of users

Value stream 2: leveraging data to extend our client’s offer to new territories

1. Iterating over a Business Model Canvas to define a new line of services

Our client’s strategic ambition was not only to leverage data to transform its operations but also to build a new line of services complementing its existing offer. Starting from a very traditional business, the vision was to become a key player in real-estate tech. Such transformations don’t happen overnight.

We helped the management team define the value proposition of these new services, notably by iterating over a Business Model Canvas. We ran a cycle of workshops and systematically challenged our assumptions in interviews with prospective clients.

After several pivots, we formalised two main value propositions, each detailed in a problem specification blueprint for data scientists to work on.

2. Fast-prototyping algorithms to secure partnerships with external data providers

Developing effective machine-learning algorithms requires data. Organisations always sit on various sources of more or less accessible data, but the best opportunities often come from combining several sources from different owners.

To develop our algorithms, we needed more data than was immediately available. We needed partners, and to get partners, we needed something to bargain with: a chicken-and-egg situation. We decided to start by rapidly prototyping our target algorithms with the data we could access, and to build a prototype UI that anyone could try. This helped us showcase our use case to prospective data partners (while being honest about current performance), whose data in turn made our service more reliable. This approach, closer to “build, then pitch” than to “fake it until you make it”, was completely new to our client.

3. Involving non-technical business experts in iterations on algorithm performance

A key challenge was that we needed experts’ feedback and knowledge to improve the algorithms. But how do you engage business people in such technical matters?

The first solution was to use the prototype to collect feedback from business people following a test plan. Then, every two weeks, a dashboard of the algorithms’ performance was presented to a focus group to gather insights into why they performed well in certain areas and what could explain their weaknesses. This fed a feature-engineering backlog for future sprints. Finally, we made the algorithms’ output available directly in the business application developed in the first value stream, and added gamification features to encourage experts to use the algorithms systematically and assess their performance.
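The biweekly performance dashboard can be sketched as a per-area error breakdown. For a valuation model, one common choice is the median absolute percentage error (MdAPE) between estimated and actual transaction prices. The metric, the `area` grouping, the helper names and the sample figures below are assumptions made for illustration, not the project’s actual dashboard.

```python
from collections import defaultdict
from statistics import median
from typing import Dict, Iterable, List, Tuple

# (area, estimated price, actual transaction price): hypothetical records
Record = Tuple[str, float, float]


def mdape_by_area(records: Iterable[Record]) -> Dict[str, float]:
    """Median absolute percentage error per area, in percent (1 decimal)."""
    errors: Dict[str, List[float]] = defaultdict(list)
    for area, estimate, actual in records:
        errors[area].append(abs(estimate - actual) * 100 / actual)
    return {area: round(median(errs), 1) for area, errs in errors.items()}


def worst_areas(scores: Dict[str, float], n: int = 3) -> List[Tuple[str, float]]:
    """Areas with the highest error: candidates for focus-group discussion."""
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)[:n]


if __name__ == "__main__":
    sample = [
        ("Paris 11e", 510_000, 500_000),
        ("Paris 11e", 470_000, 500_000),
        ("Paris 11e", 300_000, 300_000),
        ("Lyon 3e", 260_000, 200_000),
        ("Lyon 3e", 190_000, 200_000),
    ]
    scores = mdape_by_area(sample)
    print(worst_areas(scores))
```

A median is used rather than a mean so that a handful of atypical transactions does not dominate an area’s score, which keeps the focus-group discussion on systematic weaknesses.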

Involving business experts in iterations on the algorithms was a major source of performance gains. It also served as a change management tool, helping business people become familiar with the intelligent system and eventually make it their own.

Results

Thanks to a user-centric approach, agile methodologies and our breakthrough mindset, we showed that a traditional business can be digitised and transformed, and helped our client stay competitive in its industry. In only 6 months, we designed, built and deployed:

- a new digital tool, available to all business lines, to search and access data (internal, partner, web and open data), expected to significantly improve operational efficiency.

- a new data-intensive service available to our client’s customers through an API, soon to be commercialised as a white-label service for both B2C and B2B users.

- a data-oriented architecture collecting and processing data from an ever-growing number of sources and external providers, and exposing it in the cloud.